The promise is seductive: precision diagnostics, personalized treatment plans, reduced physician burnout, and streamlined care delivery. But what happens when artificial intelligence, designed to solve these problems, starts to fragment trust, amplify bias, and reshape the very nature of the doctor-patient relationship?

Artificial intelligence is no longer a futuristic buzzword—it’s a clinical reality. From radiology and pathology to mental health triage and chronic disease management, AI is already making decisions that affect diagnosis, resource allocation, and outcomes. According to a 2024 McKinsey report, nearly 60% of hospital systems in the U.S. are actively piloting or deploying AI-based tools in some part of their care delivery model.

While the benefits are real—faster imaging analysis, early anomaly detection, and administrative relief—the deeper implications are less discussed. And therein lies the risk: second- and third-order consequences that unfold slowly, quietly, and structurally.

Second-Order Effects: Beyond the Clinic

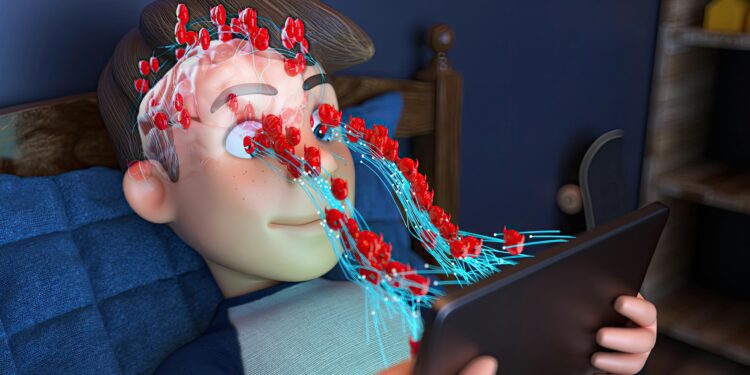

One of the most profound second-order effects of AI integration is its impact on clinical judgment and medical education. As algorithms increasingly guide diagnostic and therapeutic pathways, there’s a growing concern that clinicians may defer to the machine—gradually eroding their own diagnostic muscle.

Consider sepsis prediction tools, which are reported to outperform human physicians in some hospital systems. As reliance grows, training pathways may prioritize AI interpretation over foundational physiology. Over time, this could produce a generation of healthcare providers fluent in technology but less adept at critical reasoning, particularly in atypical cases where algorithmic logic breaks down.

Another second-order effect is the institutionalization of bias. Algorithms trained on historical data inherit historical inequities. A 2019 study published in Science found that one widely used healthcare risk algorithm systematically underestimated the health needs of Black patients, primarily because it used healthcare costs as a proxy for health status. The authors estimated that correcting the bias would raise the share of Black patients flagged for additional care from 17.7% to 46.5%.
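To make the proxy problem concrete, here is a minimal sketch in Python. It is not the algorithm from the study: the cohort is synthetic, and the names `Patient`, `make_cohort`, `share_of_group_b`, and the `access_factor_b` parameter are hypothetical, chosen only for illustration. The sketch assumes two groups with identical illness burden, where group B generates less cost per condition because of reduced access to care.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    chronic_conditions: int  # stand-in for true health need
    annual_cost: float       # the proxy a cost-trained model would rank by


def make_cohort(access_factor_b: float = 0.6) -> list[Patient]:
    """Build a synthetic cohort (assumption: both groups are equally sick,
    but group B incurs only a fraction of the cost per condition)."""
    cohort = []
    for n in range(10):  # 0 to 9 chronic conditions
        cohort.append(Patient("A", n, 1000.0 * n))
        cohort.append(Patient("B", n, 1000.0 * n * access_factor_b))
    return cohort


def share_of_group_b(cohort, key, top_k):
    """Fraction of the top_k highest-ranked patients who belong to group B."""
    selected = sorted(cohort, key=key, reverse=True)[:top_k]
    return sum(p.group == "B" for p in selected) / top_k


cohort = make_cohort()
# Rank by actual need versus by the cost proxy, then compare who gets selected.
share_by_need = share_of_group_b(cohort, lambda p: p.chronic_conditions, top_k=6)
share_by_cost = share_of_group_b(cohort, lambda p: p.annual_cost, top_k=6)

print(f"Group B share when ranking by need: {share_by_need:.2f}")
print(f"Group B share when ranking by cost: {share_by_cost:.2f}")
```

In this toy cohort, group B fills half of the six slots when patients are ranked by need, but only one of six when they are ranked by cost, even though both groups are equally sick by construction. No variable in the ranking mentions group membership; the disparity comes entirely from the choice of label.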

Such structural blind spots don’t just persist—they scale. Once embedded into health systems, biased algorithms become infrastructural. They inform clinical decisions, resource allocation, and population health strategies, often invisibly.

Third-Order Effects: Cultural and Ethical Drift

If second-order effects reshape systems, third-order effects alter cultural norms and ethical expectations.

For instance, what does “informed consent” look like in an era when treatment recommendations are generated by black-box algorithms? Patients may not know—or be told—that their diagnosis was aided by a machine learning model trained on data they never opted to share.

Trust becomes transactional. As patients learn that AI plays a growing role in their care, will they trust the process more—or less? Particularly in marginalized communities, where medical mistrust is historically rooted, the idea that “the computer decided” may intensify alienation rather than mitigate it.

There’s also a philosophical cost: What happens to empathy in the age of automation? Medicine is not just about answers but also about the manner in which they are delivered. Algorithms, however precise, cannot hold space for grief, hope, or ambiguity. If AI becomes the front line of care, will that nuance be lost, or will clinicians be liberated to reclaim it?

The Missing Oversight Framework

Despite widespread adoption, there is no universal regulatory body overseeing AI in clinical contexts. The FDA has begun developing frameworks, but most algorithms are still evaluated in closed environments, with limited transparency about training data, biases, or errors.

Moreover, many AI vendors classify their models as proprietary, shielding them from scrutiny. This black-box opacity makes it difficult for clinicians to assess when and how to challenge machine-generated recommendations.

Equity and the Digital Divide

While AI promises efficiency, it also demands data infrastructure—something many rural and underfunded clinics lack. As AI models rely increasingly on real-time data inputs (from wearables, EHRs, etc.), resource-poor systems may be excluded from benefits altogether, widening the digital divide in healthcare.

Furthermore, linguistic, cultural, and disability-related variables are often underrepresented in AI training sets. A speech recognition tool that fails to understand regional dialects or an imaging model that performs poorly on darker skin tones doesn’t just fail; it discriminates.

Redesigning AI with Humans in Mind

Rather than viewing AI as a replacement for clinicians, we must frame it as an augmentation tool—one that requires human-in-the-loop design.

That means:

- Transparent models that can explain their reasoning in plain language

- Bias audits as a requirement before deployment

- Dynamic learning systems that adapt with new, representative data

- Ethics committees at the hospital level to evaluate when and how AI is used

More radically, it means recognizing that not all efficiencies are worth the ethical trade-offs. Speed is not always synonymous with quality, and precision must not come at the cost of trust.
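As one illustration of what a pre-deployment bias audit from the list above might check, the following Python sketch compares false negative rates across patient groups and fails the audit if the gap exceeds a tolerance. This is a simplified example under stated assumptions, not a regulatory standard: the function names, the record layout, the sample data, and the `max_gap` threshold of 0.05 are all hypothetical.

```python
from collections import defaultdict


def false_negative_rates(records):
    """Per-group share of truly ill patients the model failed to flag.

    records: iterable of (group, truly_ill, flagged) tuples (assumed layout).
    """
    ill = defaultdict(int)
    missed = defaultdict(int)
    for group, truly_ill, flagged in records:
        if truly_ill:
            ill[group] += 1
            if not flagged:
                missed[group] += 1
    return {group: missed[group] / ill[group] for group in ill}


def audit(records, max_gap=0.05):
    """Fail the audit if miss rates differ across groups by more than max_gap."""
    rates = false_negative_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passed": gap <= max_gap}


# Hypothetical validation set: the model misses 1 of 10 ill patients in
# group A, but 4 of 10 in group B.
records = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 6 + [("B", True, False)] * 4
    + [("A", False, False)] * 5 + [("B", False, False)] * 5
)

report = audit(records)
print(report)
```

Here the miss rate is 0.1 for group A and 0.4 for group B, so the audit fails. A real audit would likely examine several metrics, with thresholds set by the hospital's ethics committee rather than hard-coded, but even a check this simple makes the disparity visible before deployment rather than after.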

Conclusion: The Future Is Not (Just) Algorithmic

AI will not ruin medicine—but it will change it. Whether that change is emancipatory or extractive depends on choices we make now: about regulation, equity, training, and transparency.

We must treat AI not as a neutral tool, but as a cultural force—one that reshapes norms, expectations, and institutions. It is time for clinicians, patients, ethicists, and technologists to co-author this future—before the algorithm writes it alone.