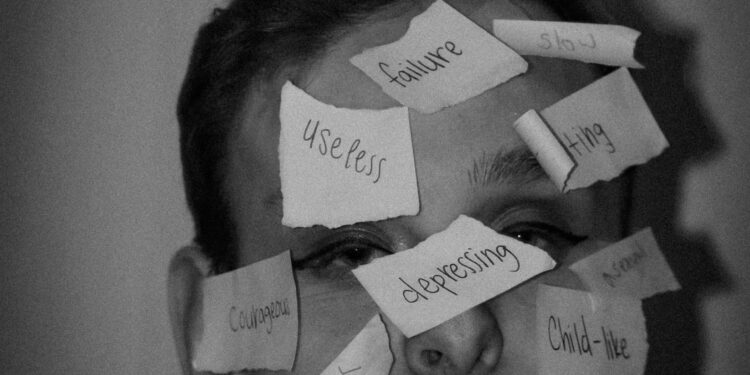

It begins with a swipe. A young person, overwhelmed by school, relationships, or isolation, opens TikTok looking for comfort. Instead, they find confusion, self-diagnosis, and pseudo-psychology masked in pastel fonts and reassuring music.

In what may be one of the most consequential digital developments in modern mental health, platforms like TikTok have become primary sources of information for millions seeking psychological support. Under the hashtag #mentalhealthtips, users can find hundreds of thousands of videos purporting to explain depression, ADHD, anxiety, borderline personality disorder, and more, often condensed into digestible 60-second clips.

But according to a recent analysis by the data science firm TTP, over half of the top 100 videos under that very hashtag contain false or misleading information. These include oversimplified symptoms, inaccurate diagnostic criteria, and unvetted “coping strategies” that may, at best, do nothing—or at worst, cause harm.

The Allure of the Quick Fix: Why TikTok Became a Digital Therapist

Why are so many users turning to TikTok for answers about their mental health? The platform’s algorithm is uniquely tailored to personal preference and emotional resonance. TikTok delivers content that “feels” right—even when it isn’t. It encourages the kind of personal storytelling and vulnerability that make mental health videos feel intimate and credible.

According to Pew Research, roughly 95% of U.S. teens use YouTube and about two-thirds use TikTok, and a significant portion use these platforms for self-education on sensitive topics like anxiety and depression. In the absence of affordable, accessible, and timely mental health services, TikTok becomes the proxy therapist.

But unlike real therapists, TikTok influencers are not always bound by evidence, ethical standards, or licensing boards.

The Risks of Misinformation: From Diagnosis to Distortion

One of the most pervasive issues is the trend of “DIY diagnosis.” Influencers list symptoms that are often cherry-picked or exaggerated for engagement. “If you hate loud noises and overthink every social interaction, you might have autism,” one trending video claims. Others present symptoms of ADHD, anxiety, or depression in ways that flatten complex clinical profiles into vague behavioral traits.

Dr. Miriam Cross, a clinical psychologist at Columbia University Medical Center, warns that such trends can lead to a dangerous paradox: “People either self-diagnose and avoid treatment, or they assume they have a condition based on incomplete information and pursue treatments that may not be appropriate.”

Even more troubling is the platform’s role in exacerbating stigma. Disorders like borderline personality disorder or dissociative identity disorder are often portrayed in sensationalized ways that reinforce misunderstanding rather than empathy.

TikTok’s Response: Collaborations, Not Censorship

To its credit, TikTok has begun partnering with reputable health organizations, including the National Alliance on Mental Illness (NAMI) and Crisis Text Line, to add in-app prompts and resources and to support content moderation. Users searching for mental health terms may encounter curated guides or referrals to professional help.

Still, critics argue that these efforts fall short. As long as algorithmic engagement rewards sensationalism and oversimplification, the incentive structure is misaligned with public health.

Content Moderation or Content Education?

What, then, is the path forward? Banning content outright may backfire, further driving misinformation to fringe platforms. Instead, some experts advocate for a layered strategy:

- Platform-Level Interventions – Enhance algorithms to deprioritize content flagged by professionals as misleading or dangerous, while promoting verified creators and licensed professionals.

- Educational Partnerships – Add dynamic educational overlays or real-time myth-busting to videos posted under popular hashtags like #mentalhealthtips.

- Creator Accountability – Encourage health influencers to disclose credentials or cite evidence-based sources, akin to how financial content is increasingly regulated for accuracy.

- Public Literacy Campaigns – Launch media literacy initiatives that target young users, helping them critically assess digital health content.

These interventions need not be adversarial. They can, and must, coexist with TikTok’s core identity: a place of storytelling, creativity, and self-expression. But if that creativity leads to harm, the platform—and its users—must confront that consequence.

The Broader Implications: Misinformation as a Mental Health Barrier

This moment is about more than TikTok. It reflects a deeper structural issue: the mismatch between the demand for mental health support and the supply of credible, accessible care. TikTok misinformation flourishes not because people are gullible, but because they are underserved.

A 2022 report from Mental Health America found that over 50 million Americans experience a mental illness, yet more than half do not receive treatment. For young people, the treatment gap is even wider.

Until access improves—and digital literacy becomes embedded into educational curricula—we will continue to see platforms fill the vacuum left by systemic gaps.

Conclusion: The Double-Edged Algorithm

TikTok has the power to democratize conversations around mental health. But that power is double-edged. What starts as validation can become confusion. What seems like advocacy can become exploitation. And what feels like therapy can, ultimately, be a performance.

If we are to trust our digital spaces with our mental well-being, then platforms, providers, and the public must demand more transparency, accountability, and integrity. Mental health deserves more than 60 seconds.