The Clock Starts Now

At 7:14 a.m. on May 8, Commissioner Martin A. Makary strode into the FDA media room and declared, without slides or caveats, that “Generative AI is no longer an experiment but a mandate.” Overnight, a short internal memo had become federal fiat: every FDA center (drugs, biologics, devices, food, veterinary medicine, and tobacco) must switch on large language model (LLM) assistants by June 30, 2025 (U.S. Food and Drug Administration).

The announcement electrified and unsettled Washington in equal measure. Within hours, Axios christened the initiative #FDAAI, warning that Makary’s deadline “raises as many questions as it answers” (Axios). On social platforms the term “cderGPT,” a nod to the Center for Drug Evaluation and Research, trended alongside memes comparing 21-page statistical review templates to tweets condensed into Shakespearean couplets.

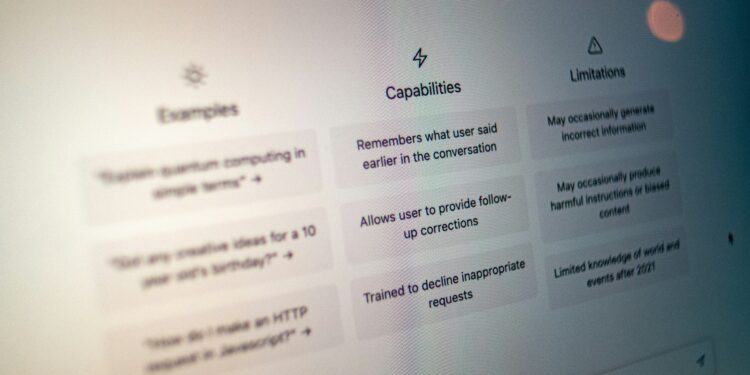

Yet behind the buzz lies a sober wager: that a technology whose hallmark is probabilistic text generation can handle the rigorous, life-and-death scrutiny the public expects from the FDA. The wager will be settled in thirty political days but could reverberate across biomedical investment horizons for thirty years.

Why a Conservative Regulator Blinked

To outsiders the FDA is staid; to insiders it is overloaded. The volume of investigational new-drug data has tripled since 2015, while real-world evidence and digital biomarker submissions multiply regulatory homework. An internal task-tracking audit in January found that reviewers spend 61 percent of their time “triaging, summarizing, or searching” rather than analyzing (Becker’s Hospital Review).

Makary’s team argues that LLMs are uniquely suited to devour appendices, flag safety outliers, and pre-populate reviewer templates. “We measured a three-day task collapsing into thirteen minutes,” said Jinzhong Liu, deputy director of Drug Evaluation Sciences, when the pilot concluded in March (Becker’s Hospital Review).

Cost politics matter too. Congress has balked at doubling user fees and the Government Accountability Office recently criticized the FDA’s “manual review choke-points.” A generative-AI fix promises efficiency without new headcount—a story fiscal hawks can applaud.

Inside the Pilot: Birth of cderGPT

The six-week pilot, conducted in the drug center, paired reviewers with a fine-tuned GPT-4-class model trained on decades of anonymized assessments. Engineers built a retrieval layer to isolate documents inside secure enclaves, limiting hallucinations by constraining context windows to verified PDFs.
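
The idea of a retrieval layer that only admits verified documents into the model's context can be illustrated with a minimal sketch. Nothing here reflects the FDA's actual implementation: the `Document` fields, the `build_context` function, the keyword-overlap ranking, and the character budget are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    center: str     # hypothetical center label, e.g. "CDER"
    verified: bool  # assumed flag set by an upstream verification step
    text: str


def build_context(query: str, corpus: list[Document], center: str,
                  max_chars: int = 2000) -> list[Document]:
    """Pick verified documents from one center that share terms with the query.

    Ranking is plain keyword overlap (an assumption; a real system would
    likely use embeddings). Unverified or out-of-center documents are never
    eligible, which is the constraint the retrieval layer is meant to enforce.
    """
    terms = set(query.lower().split())
    candidates = []
    for doc in corpus:
        if not doc.verified or doc.center != center:
            continue  # hard filter: only verified, in-center documents
        overlap = len(terms & set(doc.text.lower().split()))
        if overlap:
            candidates.append((overlap, doc))
    # Highest overlap first; the sort is stable, so ties keep corpus order.
    candidates.sort(key=lambda pair: pair[0], reverse=True)

    context, used = [], 0
    for _, doc in candidates:
        if used + len(doc.text) > max_chars:
            continue  # skip documents that would exceed the context budget
        context.append(doc)
        used += len(doc.text)
    return context
```

The filter runs before any ranking, so an unverified document cannot reach the prompt no matter how well it matches the query.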

Tasks tested:

- Executive-summary drafts of clinical and statistical reviews

- AESI (Adverse Events of Special Interest) tallies across multiple trials

- First-pass manufacturing-site inspections, highlighting missing compliance forms

- Comparative-efficacy grids against approved therapeutic alternatives

Reviewers graded outputs on accuracy, style, and “explainability.” The median factual-error rate was 2.3 percent, compared with 1.1 percent in human drafts, but the AI drafts were completed in one-tenth the time. A red team of data scientists then injected adversarial prompts; the AI passed 94 percent of injection-resistance tests. Those numbers, Makary insists, justify scale-up (Fierce Biotech).

Architecture of a Blitz Deployment

Deploying AI inside a national regulator is not as easy as flipping a cloud switch. The FDA has opted for a hub-and-spoke model:

- A central orchestration layer—cloud-agnostic, FISMA-High compliant—routes queries to specialized “sub-models” fine-tuned for drugs, devices, food pathogens, and veterinary biologics.

- Each center receives a context pack: curated corpora, ontologies, and rules engines mapping statutory references (e.g., 21 CFR Part 314).

- An audit ledger captures every prompt, completion, and human override to meet Freedom of Information Act thresholds and future court subpoenas.

- A new Chief AI Officer will sit atop the enterprise analytics division, reporting monthly to the Office of Information Management on drift, bias metrics, and uptime.
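
The hub-and-spoke pattern with an audit ledger can be sketched in a few lines. This is a toy model under stated assumptions, not the agency's design: the `Orchestrator` class, its method names, and the hash-chaining of ledger entries (added here so that tampering is detectable) are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable

SubModel = Callable[[str], str]  # stand-in for a center-specific model


class Orchestrator:
    """Hub that routes prompts to per-center spokes and logs every event."""

    def __init__(self) -> None:
        self._spokes: dict[str, SubModel] = {}
        self.ledger: list[dict] = []

    def register(self, center: str, model: SubModel) -> None:
        self._spokes[center] = model

    def ask(self, center: str, prompt: str, reviewer: str) -> str:
        if center not in self._spokes:
            raise KeyError(f"no sub-model registered for {center!r}")
        completion = self._spokes[center](prompt)
        self._append(kind="completion", center=center, reviewer=reviewer,
                     prompt=prompt, completion=completion)
        return completion

    def override(self, center: str, reviewer: str, note: str) -> None:
        """Record that a human reviewer overrode the model's output."""
        self._append(kind="override", center=center, reviewer=reviewer,
                     note=note)

    def _append(self, **fields: str) -> None:
        # Each entry stores the previous entry's hash (assumed design).
        prev = self.ledger[-1]["hash"] if self.ledger else ""
        entry = {"ts": datetime.now(timezone.utc).isoformat(),
                 "prev": prev, **fields}
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.ledger.append(entry)

    def verify(self) -> bool:
        """Recompute the chain; any edited entry breaks it."""
        prev = ""
        for entry in self.ledger:
            body = {k: v for k, v in entry.items() if k != "hash"}
            payload = json.dumps(body, sort_keys=True).encode()
            digest = hashlib.sha256(payload).hexdigest()
            if entry["prev"] != prev or entry["hash"] != digest:
                return False
            prev = entry["hash"]
        return True
```

A caller would register one callable per center, send prompts through `ask`, and record reviewer corrections with `override`; `verify` then confirms that no logged prompt, completion, or override has been altered after the fact.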

Yet even advocates concede that hard edges remain fuzzy. The press release promised “end-to-end zero-trust pipelines,” but offered no public cryptography specs (Dermatology Times). Similarly, staff workshops on “prompt hygiene” have begun just as the system leaves the sandbox, an inversion of Silicon Valley’s “crawl-walk-run” ethos.

Promise: Faster Reviews, Cheaper Trials

If the rollout works, benefits accrue fast:

- New-drug speed: Modeling suggests an average six-month NDA review could shrink by 30–40 days, translating into earlier market entry and hundreds of millions of dollars in additional sales while patent protection lasts.

- Small-company parity: Start-ups, often lacking regulatory-affairs armies, could submit cleaner dossiers if the agency publishes LLM-generated checklists.

- Patient safety: By automating real-world adverse-event scans, the FDA could surface safety signals months before current databanks flag them.

- Reviewer morale: Instead of swivel-chair drudgery, Ph.D. pharmacists might spend mornings interrogating mechanism-of-action hypotheses, not merging Excel sheets.

Wall Street has noticed. After the pilot news, the iShares Genomics & Immunology ETF ticked up 1.8 percent, and venture term sheets began citing “LLM-ready regulatory narratives” as a differentiator for Series A therapeutics.

Peril: Proprietary Data and Hallucinations

Yet the road to algorithmic governance is paved with edge cases. Pharma sponsors fear the system might inadvertently leak trade secrets across internal boundaries. Although Makary promised “strict model compartmentalization,” the FDA declined to confirm whether embeddings are entirely center-specific. In a letter to leadership, the Biotechnology Innovation Organization urged “verifiable assurances” before confidential briefings are fed to the model (Axios).

Civil-society groups worry about automation bias. If reviewers accept AI-generated synopses, subtle statistical quirks could pass unnoticed. Even with an audit trail, diagnosing why a transformer model flagged—or missed—a QT-interval signal is non-trivial.

Then there is cybersecurity. The Department of Homeland Security lists the FDA as critical infrastructure. A prompt-hacking exploit that dumps queued advisory-committee notes onto the dark web could destabilize biotech markets overnight. In March, white-hat hackers demonstrated an adversarial chain-prompting attack that forced a leading commercial LLM to reveal its internal system messages; the FDA’s in-house fork may prove safer, but no generative model is bulletproof.

Comparative Optics: EMA, MHRA, and Beyond

Globally, regulators watch with envy and alarm. The European Medicines Agency (EMA) has taken a slower path, announcing a sandbox for language-model summarization but limiting production use until 2026. The UK’s MHRA partnered with DeepMind on label-compliance AI, yet requires synthetic rather than live dossiers during training.

If the FDA hits its June deadline without a public catastrophe, it will define the gold standard. Conversely, a breach or high-profile hallucination could embolden international skeptics and gum up harmonization talks under the ICH (International Council for Harmonisation).

Policy Cross-Currents and Legal Exposure

The rollout lands amid Beltway debates on the AI Leadership for Agencies Act, a bipartisan bill that would force every federal department to appoint chief AI officers and file annual algorithmic-risk reports. While Makary has pre-emptively created the role, a statutory mandate could empower Congress to subpoena LLM logs.

Litigation risk is uncharted. If an AI-assisted review misses a carcinogenic impurity later discovered post-market, plaintiffs could allege negligent reliance on unvalidated software. The Justice Department’s Civil Division has begun informal consultations on sovereign-immunity contours should such cases arise, according to two officials familiar with the talks.

Industry Adaptation: From Submission PDFs to JSON APIs

Sponsors are already reorganizing. Several large pharmaceutical firms have formed “LLM-readiness tiger teams” to structure trial data in machine-readable JSON so the FDA’s embeddings have less noise. Contract-research organizations advertise “prompt engineering for regulators” as a billable service.
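
What "machine-readable JSON" might mean in practice can be shown with a small sketch that normalizes adverse-event rows into one JSON object per line. The schema is purely hypothetical: the field names in `REQUIRED_FIELDS` and the helper names are assumptions, not an FDA or industry standard.

```python
import json

# Hypothetical minimal schema for one adverse-event record.
REQUIRED_FIELDS = {"study_id", "arm", "subject_id", "event_term", "serious"}


def to_record(row: dict) -> dict:
    """Validate a raw row and normalize types and whitespace."""
    missing = REQUIRED_FIELDS - row.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return {
        "study_id": str(row["study_id"]).strip(),
        "arm": str(row["arm"]).strip().lower(),
        "subject_id": str(row["subject_id"]).strip(),
        "event_term": str(row["event_term"]).strip().lower(),
        "serious": bool(row["serious"]),
    }


def to_json_lines(rows: list[dict]) -> str:
    """Serialize rows as JSON Lines with stable key order."""
    return "\n".join(json.dumps(to_record(r), sort_keys=True) for r in rows)
```

Rejecting incomplete rows up front and normalizing case and whitespace is one plausible way to reduce the "noise" the paragraph above refers to, since downstream text matching then sees a single spelling per term.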

Device makers eye similar gains. The Center for Devices and Radiological Health, chronically backlogged on 510(k) submissions, plans to feed its model radiology-imaging metadata, hoping to cut review backlogs that average 243 days (AI Insider).

But the real wildcard is food safety. If generative AI can pre-screen hundreds of ingredient dossiers overnight, import alerts could issue before contaminated products hit U.S. ports. That prospect has drawn quiet applause from consumer advocates and loud grumbling from import brokers unready for machine-paced inspections.

Human Futures in a Machine-Mediated FDA

Sociologists of expertise point out that professional legitimacy rests not only on knowledge but on who wields it. Will physicians trust a label addendum authored partly by an algorithm? Will advisory-committee members demand to see the model’s chain of thought?

The FDA promises “explainable-AI overlays” that expose evidence snippets, but neural nets remain probabilistic at their core. A reviewer can interrogate the why of a logistic regression; an LLM’s attention weights often remain opaque. The agency’s Artificial Intelligence Program Office will therefore train reviewers in “skeptical synergy,” treating outputs as a second opinion rather than gospel.

The Thirty-Day Litmus Test

In one month the FDA will either deliver the most audacious digital-government deployment in modern memory or will request a reprieve. Makary is betting on audacity. If he succeeds, generative AI could become as ubiquitous in regulation as PDF is today—a silent co-author of every approval. If he fails, the agency could face a congressional reorganization it has dodged since thalidomide.

Either way, the stakes transcend bureaucracy. They touch every patient waiting for a therapy, every scientist parsing raw data, and every citizen who presumes the label on a vial is the product of human discernment. Algorithms may soon stand sentinel at the gate between laboratory and bedside. Whether they guard it wisely will depend on how carefully, and how quickly, we teach them the gravity of that watch.